The Worst Employee Ever

This is archived from the original post still on the Internet Archive. I was bowled over by the lack of awareness here so I just had to archive it. There are several images in the original blog post that I haven't backed up. However, I did pull down the real whopper - an excerpt from the termination letter - and put that up.

Preface

Hello,

I was employed by Hudson River Trading (HRT).

The company never provided due holidays to UK employees. The issue of holidays was brought up countless times by me and other employees, during the time I worked there. All requests were systematically ignored.

The problem could have been confirmed in 5 minutes of legal review. Looking at the employment contract (28 days) and looking at the holiday allowance in the absence tool, Namely (25 days).

The company simply explained to any employee raising a question about holidays, that they are not entitled to holidays and they’re wrong. When the UK office grew and the issue escalated further, because there is genuinely a problem that remained unaddressed (employees were risking losing their job or their bonus for missing days of work they don’t comprehend), Hudson River Trading responded by firing employees who questioned holidays.

I was fired for requesting holidays for UK employees. Upon termination, the company unilaterally refused to pay notice period, refused to pay non-compete and refused to pay outstanding holidays. I had to bring legal actions against the company, who largely ignored the requests from my lawyers and left me hanging with legal fees, the company required me to sign a very strict settlement agreement in order to get a reference letter and waiver of non-compete, or otherwise to see my career my livelihood and my family shattered. It was made abundantly clear that anybody who reports any concern will meet the same fate as me.

Hudson River Trading has zero regards for the law, their employment contracts, or financial regulations. I can’t comprehend the length they are willing to go, retaliating and silencing employees, just to prevent UK employees from having a few days of holidays. It’s absurd.

This article is a protected disclosure to highlight exceptional serious failures at Hudson River Trading.

I am going to cover my experience with Hudson River Trading in great details. This is a very long read, about 20 pages of text and 20 pages of legal documents. Hold on.

TL;DR

1) Let it be known that Hudson River Trading never granted due holidays to UK employees, the company only granted 25 days off (later 26), ignoring both employment law and their employment contracts which entitled employees to 28 days. The issue has been raised countless times and Hudson River Trading has resorted to terminate and silence employees who spoke up.

2) Let it be known Hudson River Trading does not pay notice period, does not pay non-compete clause, and does not pay outstanding holidays upon termination.

3) Let it be known that Roberta Yuan (HR and legal) and Prashant Lal (partners) are terminating and retaliating against employees who report concerns (aka. protected disclosures).

4) I am formally reporting Roberta Yuan to be formally investigated for exceptional wrongdoings and embezzlement. She runs both HR and legal in the company (extreme conflict of interest). She is using her position and drawing company funds to drive retaliations against employees and cover her own wrongdoings.

5) Prashant Lal is formally signing the papers as the director, which makes him personally responsible and liable for everything. Other than signing, his involvement is unclear.

6) HR and legal functions are compromised. I formally warn employees to NOT raise complaints/grievances, at the risk of their job and their personal safety. In fact the wiki page to raise HR questions is actually giving the lawyer as the point of contact.

7) I encourage current and past employees to come forward and raise concerns and documents directly to the FCA. The FCA can be reached by filling the contact form online https://www.fca.org.uk/contact. Documents and attachments can be provided by email directly to whistle (at) fca.org.uk with Case Number #53063 Hudson River Trading in the object.

Life at Hudson River Trading

First Contact

The first day I was off was 26th December, known as Boxing Day, a bank holiday in the UK.

The 27th of December I was awakened at 5am by HR (or whom I thought to be HR), to check where I was because I did not show up to work the previous day.

From their words, my manager was worried why I wasn’t in office and whether I was alright. He escalated to HR to contact me and check

.

The 26th of December was a bank holiday in the UK. The company was closed. It was formally written in the company policy that the company is closed on that day, see wiki page on Holidays. Oh boy I did not want to face disciplinary actions for being off on a day the company is closed. U_u

Notice that 5 AM London time is midnight in New York time. The employee had to stay all night just to wait to contact me?

Holidays Absence or Absence of Holidays

The next bank holiday was around April. https://www.gov.uk/bank-holidays#england-and-wales

Same thing happened. I was off and the company was closed. Only to come the next morning and face my manager telling me I missed a day of work and he reported me. What?!

I had to explain again to my manager that it’s a bank holiday in the UK. It’s not a work day here. The same pattern repeated many times.

My manager insisted to formally take a day off if I am off, under HR instructions. I had to explain that doesn’t follow my employment contract. I wouldn’t be able to take holidays either way because I don’t have enough days off in Namely, the absence tracking tool. The manager referred to HR to sort out the problem. HR explained that I do not have holidays I thought I had. Well, what they stated did not match my employment contract.

The company only granted 25 days off per year to UK employees in Namely (the absence request tool). However UK employees should have 28 days off in addition to days the company is closed as per their employment contract.

Statutory Rights

Under British law, full time employees are entitled to 28 days off a year, including the bank holidays. (It’s possible to have 8 days other than the 8 bank holidays; the total has to be at least 28 days).

Contractual Rights

Most companies give more than the statutory minimum; it is set in the employment contract. It’s common in London to have between 25-30 days, in addition to bank holidays.

This is page 3 of my employment contract. I’ve asked other employees and they all had the same clause.

The contract means that UK employees are entitled to have 28 days off in addition to days the company is not open for business (typically bank holidays for UK businesses). It’s not clearly defined.

For reference, in British employment law, a “Working day” is formally defined: For the purposes of the Companies Act 2006, in relation to a company, a day that is not a Saturday or Sunday, Christmas Day, Good Friday or any day that is a bank holiday under the Banking and Financial Dealings Act 1971 in the part of the UK where the company. (For reference, there’s always 8 bank holidays a year and they are fall on Monday to Friday). https://uk.practicallaw.thomsonreuters.com/3-381-0053

The firm had its own definition, that was shifting and unclear.

Holidays Absence or Absence of Holidays

I lost count how many times I found myself confronted to Human Resources and/or my manager for planning to be absent or for being absent on a closed day.

Hudson River Trading only granted 25 days off and expected employees to deduct bank holidays from that.

- Under British employment law, employees are entitled to 28 days off per year (counting in the 8 bank holidays). Employees are given less than the statutory minimum (25 minus some bank holidays). It’s a breach of employment law.

- Under employment contract, employees are entitled to 28 days off per year + days the office is closed. Employees are given less than 28. It’s a breach of contract.

I very specifically raised the request to Human Resources to review their UK employment contracts and correct the holiday entitlement to 28 days, multiple times, for myself and other UK employees. The request was always ignored. Sometimes they’d say they’d look into it and come back, but there was no follow up.

This was a general issue affecting ALL UK employees of Hudson River Trading. There had been countless complaints reported and it’s never been actioned. The issue had been ongoing for years I’ve been told, before I worked for the company.

In US English, this was ground for class action lawsuit against the company from every UK based employee against Hudson River Trading… if only class actions were a thing in the UK.

Unilateral Deduction

At some point end of April I noticed an automated email from the absence tracking tool Namely, a request had been submitted on my behalf to deduct a day of. Then much later a second email, the request had been approved.

I wasn’t informed that a day has been deducted except for the automated notification. I do not know who took the initiative to do that or who approved or why.

This was reducing the holiday entitlement further away from the statutory minimum, to a point where employees won’t have enough days even when taking most bank holidays off (which the company expect you to work). Shouldn’t do that.

Contractual Failure

Hudson River Trading doesn’t follow the contract or the law. What are they doing then?

One of the root causes of the holidays issue was the employment contract (or at least that’s what I thought at the time). A normal employment contract should state how many days are off, as in “The employee is entitled to X days off per year in addition to bank holidays” (most tech companies in London have X between 25 and 30).

The contract says employees are entitled 28 days off in addition to “days the company is not opened for business”. What are those? Nobody knows. The contract of employment for Hudson River Trading is unclear. It’s a notable mistake on the part of whoever drew and reviewed the contract.

The company tried to define closed days in a wiki, the wiki was unclear and it kept being modified (which is unlawful, it’s a contract and it cannot be modified unilaterally like a wiki).

Employees should have had 28 days off, the company only granted 25 days off. The company wanted to deduct (some) days the office is closed; however they have to be in addition as per the employment contract.

I raised these issues specifically I don’t know how many times. I’ve asked HR to formally review the contract with a lawyer. It should take 5 minutes to look at the contract and the Namely allowance, to realize that employees have never been granted due holidays. The lawyer should be able to work out what closed days is supposed to mean (and flag the lack of clarity as a problem).

One time I brought the issue of holidays to HR, she replied that she can adjust the policy, a wiki, to delete the extra holidays we’re not supposed to have (the company really doesn’t want employees to have days off). I had to explain that the company cannot delete holidays unilaterally. Employment terms form a contract in the UK and they cannot be edited unilaterally. This applies to holidays and most internal policies, they’re considered to be part of the employment contract in the UK.

What To Do?

The same pattern repeated over and over.

– Before or after a holiday, my manager would tell me I shouldn’t be off.

– I’d have to explain that the day is off because it’s written in my employment contract. And I can’t take the day off even if he wanted me to because I don’t have enough holidays allowance in Namely.

– My managed would report me to HR to sort out the problem of holidays, to who I would repeat the issue and ask for the holiday allowance and/or the contract to be corrected.

– HR would systematically dismiss the request and say there’s no holiday. Though once in a while, they’d say they’d look into it and come back to me. They never did!

– I was bleeding to death from the fear of losing half of my compensation or my job, at any minute.

April-May-June was supposed to be a chill period covered with bank holidays in the UK. Instead every single week was problematic and a source of unwarranted disciplinary actions for employees. Nobody understands what days employees are supposed to work or not.

Employee might be fired over this, to no fault of their own. Employees might face half of their compensation cut off suddenly. Hudson River Trading is a financial company with a large part of the compensation paid as discretionary bonus, confrontation is not how to increase bonus. Your mileage may vary, whether you try to take any day off and whether your manager or HR decide you cannot have that day. It’s quite inconsistent of course because nobody understands holidays.

There were new joiners in my team in April. They were supposed to start on a bank holidays. Had to explain that there won’t be people in the UK to welcome them because it’s a holidays, got directed to HR to sort out the problem, who insisted there are no holidays and we’re expected to work. New joiners thought they would have another day instead and they will have other bank holidays, well, nope. Expect a shock for employees coming from other companies and losing 5-10 days off compared to their previous role. Had to warn new joiners and bring them up to speed slowly about the company. The guy before me disappeared under very questionable circumstances. I might be next at this rate. Any of us might be next.

The general advice for developers in bad situation is to immediately leave. It’s almost became a meme on the internet, just quit. Well, it’s not that simple, notably because of claw-back clauses and non-compete.

Claw Back Clauses

I’ve looked at my options to leave the company. It is not possible to leave the company.

The employment contract has claw back clauses for 12 months, that’s pretty long. To leave the company an employee must repay their sign-on bonus if there was one (I had one) and part of their compensation. Assuming the clauses are enforceable, vary with circumstances.

I estimated that if I were to leave the company, either willingly or unwillingly, I’d have to (re)pay the company about £50k. The amount is increasing each month.

(Well, I estimated wrong but I only realized that months later, the claw back only applies to 4.1.b about the sign-on bonus, it doesn’t cover the discretionary compensation in 4.1.c, I missed the small (b) on the first reads. That’s really unfortunate for me. )

The company might try to claim a lot of money back in the event that they terminate employees. It was very scary. It made the problem of holidays much worse, waiting to explode in the face of the company, there is no easy way to leave willingly or unwillingly.

See contract section 4.2 for claw back and section 14.

Non-Competition Clause

Of course to leave, one must first get a job. It’s really difficult to get an interview, let alone a job. Financial companies are very few and very selective.

The abuse of non-compete clauses is rampant in the industry to prevent employees from moving. Other companies will simply not interview or cancel later stages because of non-compete. Non-compete clauses are an enormous problem to change job in the UK and they are considered very enforceable.

The employment contract with Hudson River Trading had a stringent non-compete for 6 months.

Between the non-compete and the claw-back clauses, it’s really difficult to leave Hudson River Trading. Prospective employees should be warned that when they join Hudson River Trading, there’s no leaving.

I thought, if something went horribly wrong (and things are not going great because of the holidays problem) the company will at least have to pay the non-compete for 6 months and I won’t be left to starve. Let it be known that Hudson River Trading unilaterally cancelled their non-compete.

Haemorrhaging Employees

I found out that the way my body reacts to extreme stress is to bleed. My nose just starts bleeding anytime for seemingly no reason. It’s stress!

It came to a point where I was bleeding 3 or 4 times a day, every day. This was my body warning me of life-threatening conditions. I’ve never been confronted to stress so extreme in my life than when I was working at Hudson River Trading. The worst is that there’s no way out between the claw back clauses and non-compete.

I had to turn off my webcam during most meetings because of the bleeding. If other employees noticed I stopped showing myself, I’m sorry, you should know that it’s because I was dying, I had to cover the blood.

Maybe I should have taken a doctor’s note and get off-sick, but how to get time off-sick when the problem that makes you sick is that you cannot get time off???

HR Announcement

After months of raising grievances, HR finally decided to take action to resolve the issue, by unilaterally reducing holidays. Wait, what?

Notice the first line, confirming that multiple employees have been raising issues for a while.

This email modified the holiday entitlement from 25 days off to 26 days off and made Boxing Day (26th December) a working day. (It might not be clear but that’s what happened after this email).

It effectively removed one day off (employee should have 28 days entitlement + closed days like Boxing days).

There is a wiki page, for the official company policy, specifying 26th as a day off, the wiki was edited out to remove the day. It’s unlawful, the company cannot unilaterally deduct and change holidays. This is part of employment contract and cannot be edited unilaterally. (Not that you could get away easily with the 26th anyway, I was off the last 26th December and was awakened the next day at 5 AM for not showing up to the office).

That announcement did not help. It was still unclear what days we are supposed to work or not this year. It did not match the employment contract. It did not grant statutory holidays. There were many more bank holidays coming up in May and June and it’s not clear when employees are supposed to work.

I can’t comprehend how did this announcement ever passed 5 minutes of legal review? It’s unlawful. It doesn’t address the problem.

Had to go back to HR once again to report all the points above and try to clarify holidays. The HR lady looked legitimately surprised that she cannot unilaterally cut holidays like that and said she would get back to me. There was no follow up.

Escalation

Nothing happened despite more grievances. I could not go on like that not knowing when to work and constantly being under afraid, there was a genuine major issue, that was leading to get employees fired or bonus cut or lawsuit or worse.

I escalated the issue to the partners with UK employees in copy.

I received a reply from Jason, who’d said he’d personally look into the matter. No idea who Jason was but he seemed important. (Remember the name, it will come up later).

At about the same time, I was contacted by another individual to get me into a meeting, this was lead HR and my manager’s manager informing me I am suspended until they investigate the matter. I insisted again that the issue is real and if they took 5 minutes to show the employment contract and the allowance to any lawyer or the lawful, the company would be immediately told that they forgot to grant holidays to UK employees.

My laptop was remotely shutdown and wiped right after the meeting. (They told they’d cut access while investigating).

Was that really necessary to review a contract?

Explosion

I was invited to join a phone call few days later, with lead HR (or whom I believed to be the lead HR) and my manager’s manager. Couldn’t even use my work laptop to join the meeting because the laptop had been murdered.

Oh boy I was naive to think that they would look into the matter.

Lead HR told me that I am fired effective immediately.

(☉_☉) That’s legally not possible, it is unlawful to terminate employee after making a protected disclosure, they can’t terminate me now.

HR informed me, precisely, that I am not being fired, the company is simply breaking my probation period, because I am still in probation period and I failed for poor performance.

(☉_☉) I am in utter shock and silent. I am NOT in probationary period or under a PIP. There is no issue with my performance. In fact my last conversation with my manager was that I am doing great and I will see my bonus increasing this quarter. The company is just making up reasons that do not exist.

HR repeated that I am terminated for performance issue. The manager added that I should never have sent this email to the partners, this is extremely inappropriate to escalate the issue like that and he can’t keep me on the team.

(☉_☉) Silence. Notice how HR is trying to make up non-existent issues about performance while the manager is formally telling me that I am being fired for making a protected disclosure. The reason for the termination is confirmed. I am now officially a whistle-blower. (That sucks, I don’t want to be a whistle-blower, what I wanted was my laptop unlocked, holiday allowance corrected to 28 days, and get back to work)

HR explained that the company is not paying the non-compete, the company is paying me 1 week to cover the notice period, but as an exceptional gesture the company is generously giving me 1 month of pay as severance to cover the troubles and the time to find a new job.

(☉_☉) There is no word for how shocked I am. The company cannot unilaterally decide to cancel the non-compete like that. I always thought if something went horribly wrong I would get the 6 months of non-compete as severance as a last resort. Nope. Hudson River Trading is unilaterally cancelling it. The notice period is actually 1 month. The company is refusing to pay the notice period and offering me to shut up for not paying my notice period. These people are insane. And they really think 1 month is enough to find a job?

I asked if they looked into holidays and if they will adjust the holidays for UK employees? and reviewed the employment contracts as requested?

HR responded that I am wrong and they will do nothing about holidays. Employees do not need more holidays.

(☉_☉) It’s extremely clear that the company hasn’t investigated anything. My access was terminated specifically to prevent me from contacting other employees and my laptop was wiped remotely in a deliberate attempt to destroy any evidence I may have had.

I insisted it’s not possible. If they had shown the employment contract to any lawyer in the UK and the holiday entitlement in namely (25 days before announcement or 26 after) and the employment contract (28 days expected), it would be pointed out immediately that the company has not granted due holidays to any UK employee.

HR insisted again that I am wrong about everything, HR added that non-disclosure clauses from my employment contract are in effect and if I talk about this matter anywhere to anyone, this is a breach of my confidentiality clause and she will pursue me on behalf of the company.

(☉_☉) Holy shit. She’s lying straight to me face about my rights and she’s plainly threatening to personally pursue me. For reference, I was just fired in retaliation for making a protected disclosure for the benefit of all UK employees, under British law all confidentiality clauses are waived and I am permitted to make a public disclosure. HR should be aware of that. (The lawyer would have pointed that too if they consulted one about holidays!) https://www.legislation.gov.uk/ukpga/1996/18/part/IVA

I asked who is the legal contact for the company? I require the legal contact in order to bring legal action against the company. I am getting a lawyer, the company will be contacted by my lawyer.

HR replied herself. The HR person who fires employees is also the legal contact. (Odd?)

I received my formal termination letter shortly afterwards.

A Personal Note

There’s something that’s really difficult to capture in text. It how problems were constantly created out of thin air and escalated out of control at Hudson River Trading (and not just about holidays).

- The first time you’re awakened at 5 am for being absent on a bank holidays. It’s painful, but it’s accidental, right? US folks don’t pay attention to UK holidays or time zones.

- The third time you’re confronted by your manager or HR, it’s not accident, there’s really a problem. I checked with other UK employees and UK employees really cannot take holidays. I’ve worked for international companies; it’s a mess when they open new offices abroad, lots of little things to sort out. The issue here is not out of the ordinary, just need to adjust some holiday’s numbers and contracts.

- Then suddenly you’re fired on the spot, your access is terminated on the spot and your laptop is remotely wiped in a deliberate attempt to destroy any evidence. The company refuses to pay your notice period and openly threatens you.

For me, there was sadly never an option to ignore the problem. My manager is a developer turned manager who is possibly the most pedantic person I’ve ever worked with. If I was (thinking of) missing any day or doubting any policy, it was systematically reported, like a bug. He actually wanted me to address the issue for other employees and new joiners of the team, which is fair, however it cannot be done if the company is not willing. It was a death march. It was bound to end up with the issue resolved or the team eliminated.

Lawyer Up

Time to get the lawyers involved. Not like there was a choice. The company refused to pay the notice period or the non-compete or to offer any severance.

ACAS

First thing was to call ACAS and explain to get advice. https://www.acas.org.uk/contact

They replied the basics:

- What you made was a protected disclosure.

- The company terminated you following the protected disclosure.

- Under British law, most protections for full time employees do not apply until 2 years of service, however if you are terminated for making a protected disclosure about a statutory right, which you were, protections apply regardless of time of service and you can sue for unfair dismissal.

- There is no cap to the level of compensation (we’re talking UK, not US, the compensation is peanuts anyway)

- You must get a lawyer ASAP.

- There’s only 3 months to bring a claim to the employment tribunal, starting from the moment you were terminated.

On Normal Abnormal Termination

Let’s cover how a normal “abnormal” termination was supposed to happen. When the company terminates an employee, the company should offer a severance package and a settlement agreement.

- reference letter of employment

- non-competition pay AND/OR formal non-competition waiver

- holidays pay

- notice pay

- severance pay

- payslips (separately later)

Note: I ought to include payslips and tax forms last. They don’t go into the settlement agreement but better make sure that they are provided to employees soon enough and that the amounts due are paid. Without the document above, including payslips and letter of reference and non-compete, there are major troubles to move on.

Hudson River Trading didn’t do any single item out of that. Not a single one. In fact, from their perspective they simply broke my probation period (I wasn’t on probation).

The settlement agreement goes to layers for revision. The employee and the employer each gets a lawyer. You have to find a lawyer, who will get back to the company and negotiate the terms. Law firms usually offer a standard package to negotiate the terms of a settlement agreement.

In the UK, there is a time limit of 3 months from the date of termination to sue the company in employment tribunal. It’s really short. The employee has to decide what to do, quick, the timer is running.

Without anything on the table, I had to pay a lawyer thousands of pounds just to start the process. I had to pay the full legal fees myself because Hudson River Trading did not do their job. And they will refuse to cover legal fees that they have incurred on me.

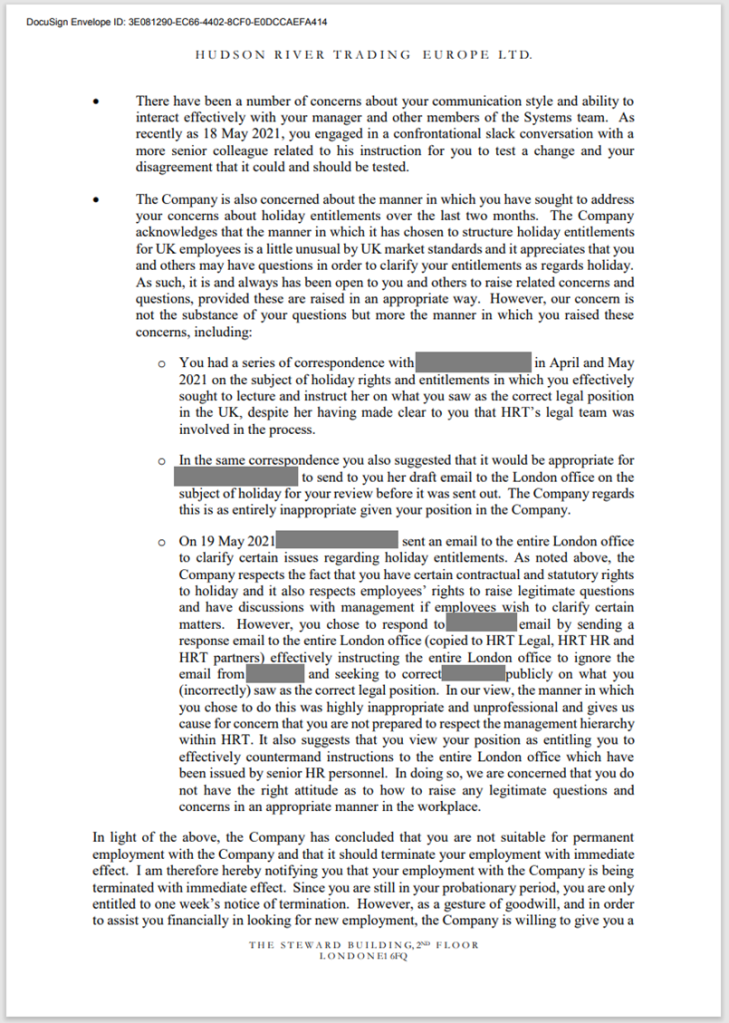

Termination Letter

Here comes the termination letter.

The first page stated what was said during the call.

- The company is breaking my probation period; I was not in probation period.

- The company is giving me 1 week of pay to cover the 1 week notice; the notice period is actually 1 month.

- The company is terminating me for poor performance and claiming I am under a PIP; there is no issue with my performance and there was no PIP.

- The company is not paying the non-compete clause, unilaterally, and did not provide a formal waiver of non-compete to forward to prospective employers.

- The company is firing me for making a protected disclosure; yes, it’s explained in writing

What’s interesting is the page 2, see below. It acknowledges in writing that there had been concerns reported about holidays for months, which remained unresolved. I resorted to making protected disclosure to partners and UK employees hoping to get the issue addressed. That made some individuals unable to cover their wrongdoing and they elected to terminate me.

This page alone should be enough to prove wrongdoing and retaliation from Hudson River Trading. That page alone should be enough for the company to terminate anybody who initiated that. If the document is escalated to regulators like FCA or SEC, there may be grounds to start an investigation and distribute fines.

The termination letter is signed by Prashant Lal, one of the partners. See page below. I’ve seen him few minutes during one induction for new joiners and seen him giving end of quarter presentations. Gotta admit, he did not strike me as a guy who would move heaven and earth to fire employees for few days of holidays.

Did Prashant actually read and sign this document for real? If yes, was Prashant explained the nature of this document, specifically meant to retaliate and silence employees making a legitimate protected disclosure. Was he explained that by signing this unlawful document he is personally liable and exposed to actions before courts and regulators?

On HR Announcement

I complained many times that the company doesn’t grant holidays and the HR announcement was unlawful.

Letter from my lawyer to the company, regarding the announcement and following termination:

Behold! The company doesn’t grant holidays and the HR announcement was unlawful.

That was determined in the first hour of meeting my lawyer, in the first minutes really, after being provided the employment contract and HR announcement.

Why was the company unable to figure that out in spite of getting recurring complaints about holidays for months?

On Holidays

What holidays are employees supposed to get? I’ve been trying to determine that for a long time.

As of 1st February 2021, the answer was 28 days in addition to the following days which were the closed days defined in a wiki:

- Friday January 1st 2021, New Year Day

- Friday April 2th 2021, Good Friday

- Monday April 5th 2021, Easter Monday

- Monday December 27th 2021, Christmas Day

(It got pretty hairy if one wants to look at the history, not that it matters. Employees were never granted 28 days off. The wiki specifying these days was edited to remove Christmas Day, which was unlawful. There was another wiki for the previous year, which was deleted, and showed something else.)

On Non-Competition

The non-competition is an enormous problem. It was practically impossible for me to leave the company willingly when I was employed, because of the non-compete and claw back clauses. (Not that I wanted to leave, I actually liked my work, I wanted to stay and keep my coworkers, which is exactly why I was trying to get holidays sorted out.)

It was extremely challenging to apply for positions after termination because of the non-competition clauses.

I tried to get some interviews with financial companies, which is incredibly difficult with how few and how selective companies are these days (note that the UK is a fraction of NYC finance job pool). They systematically asked if I had a non-compete and how long? They made a whole round of HR interviews just about that! It was horrifying.

I was falling apart and crying inside in interviews. I DON’T KNOW. I don’t know if I have a non-compete, what’s the status of the non-compete when the company illegally terminated me and refused to pay the non-compete and I am pursing legal action against the company to get it? Nobody knows. (Of course you can’t say that in an interview).

It was inhuman how much Hudson River Trading and their non-compete and their catastrophic handling of contractual matters is destroying my life and my career. And they did not bother to provide a formal waiver of non-compete when they decided to waive it unilaterally so I can’t show prospective employers that I do not have a non-compete. If a prospective employer asks to check my employment contract, which is a possibility for trading companies, they will see that I can’t work for them due to non-compete.

I wish I could say it’s unlawful to unilaterally cancel but it’s not that simple. There’s little general rule about non-compete in the UK, non-compete cases are decided on a case-by-case basis. Typically the non-compete will be considered by the court when deciding compensation.

As an employee my only recourse was to formally sue Hudson River Trading in court to enforce the non-compete against the company. It’s horrifying how non-compete are one-sided in the UK.

And there was a lot of money and details behind the non-compete, 6 month of salary, making it very important. Ideally this requires a lawyer who understands non-compete (who will say that it is ruled on a case-by-case-basis) as well as protected disclosures as well as financial regulations as well as general employment.

About Performance

The company claimed there were performance issue with me, it is false.

- In my last weekly meeting with my manager on a Wednesday, my manager informed I was doing great and my bonus would increase this quarter.

- On the following Monday I was terminated by HR for issues with my performance. It’s non-sense. There are zero issues with my performance.

The company claimed that I was under a performance improvement plan. It’s false. I was not.

There is something though that I ought to cover though. My very first week-on call there was an alert and an outage of the compute grid. Due to a bug that’s always been present, it just happened to trigger the first week I was on-call and took about an hour to resolve. The following Monday my manager asked me to join a video call. Had to wait a little while because I was in a meeting, can’t be everywhere. Unbeknownst to me, this was HR and my manager waiting, to inform me that I am being put on a performance improvement plan, following poor handling of the on-call issue, then HR immediately left because she had other events to attend to. (They couldn’t bother to arrange a time where people are free and make sure every is aware they need to be present?).

An email was sent to me afterward. I formally replied to contest placing new employees on a PIP on their first week on-call and asked for training and asked to change manager rather than getting fired.

I talked to manager and HR separately the next day. I was deadly afraid to be fired. They each separately assured me they are not planning to fire anyone, it’s a very normal procedure followed with numerous employees and they rarely fired people. (Understand why I am bleeding to my own death now? The combination of the company constantly pursuing people with no reason in combination with holidays issue and claw back clauses and non compete clauses, it’s horrifying and there’s no way out)

There was never any follow up of any sort to that event. It was a one-time rant. (HR actually did not realize performance improvement plan had a specific meaning in the UK, this isolated event wasn’t one. https://landaulaw.co.uk/performance-at-work/ )

I talked to some coworkers about it. They warned me that this sort of circus happened before. The person before me disappeared under questionable circumstances; it was all extremely worrying for everyone. My role when there will be new joiners, soon, will be to warn them and ensure that they do not get canned by accident, just like other people warned me.

When Hudson River Trading started firing UK employees to cover up the holiday issue, Hudson River Trading tried to justify my termination by claiming that I was under a PIP for repeated poor performance and claimed that automatically extended my probation period. Well. It’s false and it’s unlawful. There is no performance issue. There is no PIP (that absurd rant that was never followed up isn’t one). The probation cannot be extended unilaterally without ever informing employees, if it can be extended at all.

Payslips and GDPR

There was an enormous problem after being terminated. I didn’t have payslips. (The company paid, it just did not send payslips). I was left unable to get any payslips.

There was a system to download payslips from, I tried to access it but I couldn’t access any of my payslip anymore. Access was terminated as part of the company shutting down all access?

I had to send a GDPR request to Hudson River Trading to request information, screenshot below. The company has 30 days to process the request.

The first line about HR profile was supposed to include payroll and tax forms, though that may not be clear. Communications are going through lawyers; it’s formal, slow and costing a fortune for each message.

Behold! The company simply ignored the GDPR request. No reply. Nothing at all.

It was an enormous problem because I need these documents to ask for any missing payment and to sue Hudson River Trading on various grounds. There is a 90 days delay to start legal action against the company and that required figuring missing payment and having these documents as soon as possible. The company not processing GDPR request timely or at all, was depriving me of the ability to seek legal recourse and to report wrongdoing to regulators. Also, it’s a breach of GDPR regulations.

The request got nowhere at all. Hudson River Trading will never provide anything because they know that all their communications and documents are damning evidence of illegal activities. Hudson River Trading was probably playing the watch too, because I might just miss the deadline for the employment tribunal if they stall forever.

The only thing the request achieved was the company openly threatening me. The company put a line into the later settlement agreement (which I had no choice but to sign) to retroactively cancel my GDPR request and prohibit me from sending any more GDPR request. If I send any request for any information of any sort, I will be immediately punished and requested to pay the company £35000 in damages (the sum referred in clause 3.2 is £35 000). See screenshot below, section 7.6 and 7.7.a.

They company also tried to waive my rights to make a subject access request or similar. See clauses 6.1 waiver of claim (see the settlement later in this article).

Was that legal? Can the company refuse to process GDPR requests and retaliate with punitive damages on the requester? Can the company waive rights to make GDPR requests?

There was supposed to be a data protection officer in the company, don’t know who it is, were they informed about the request and the retaliation?

The GDPR being fairly new, there is little information about what to do or the risks. I filled a report to the ICO, don’t see a case number. Sadly they are overwhelmed and only a tiny fraction of requests are processed. It’s unlikely it will ever be processed. https://ico.org.uk/global/contact-us/

Holiday Pay

I eventually managed to payslips after a while. That was difficult.

The company paid the notice period in full, after the notice from my lawyer that they are required by law to pay the notice. The company DID NOT pay outstanding holidays. There are thousands of pounds missing one way or another.

That took months to find out (took forever to get the payslips and the payment) and it’s seriously approaching the deadline for the tribunal. It’s bad.

For the avoidance of doubt, there may be debate about what holidays are due because holidays are a problem, that’s not the issue I am talking about there. It’s possible to count a few days more or less to calculate holidays pay. No matter the scenario, there are thousands of pounds missing. The company really did NOT paid outstanding holidays.

Had to send back a request through my lawyer for the company to pay outstanding holidays, there was a few backs and forth with the company lawyer to establish the amount. Then the company simply declined to pay, stating they “do not agree that holiday pay should be calculated in the manner that your client [me] suggested”.

What?!

It was not “my position” on calculating holidays. This was a legal request sent by my lawyer that’s costing me about £400 per email and that’s going to the lawyer of the company for review that’s costing I don’t know how much to the company. It took quite a bit of back and forth to establish the breakdown for the very specific circumstances here and with the text of laws given as reference.

The company is represented by counsel from Shearman and Sterling, a well-known silver circle law firm, that’s expensive and known to represent high profile financial firms.

Well, Hudson River Trading did not believe in their legal representations or in their obligations to pay outstanding holidays. (It’s consistent; the company did not believe in paying notice period or non-compete either!)

Seriously, are there actual people on the end of Hudson River Trading to process legal requests?

Pensions

Yet another potential problem: Pensions.

Companies in the UK must provide a pension scheme and on board employees after they join.

With Hudson River Trading, I had some forms to fill and a zoom call with HR to cover benefits and pension, around the time I joined. Hudson River Trading made pensions opt-in and they discouraged employees to opt-in to the pension. That was an interesting call to say the least (good luck proving that in court!).

Took me a little while to find and get access to the pension:

- Hudson River Trading paid the statutory amount, about £291 a month.

- It took 115 days between the moment I joined the company and enrolment email from the pension.

- That’s about 3 months of no pension contributions. No back pay.

There were legitimate questions about pensions. The company ought to make sure that employees are enrolled automatically and timely and NOT discourage employees to enroll. I think there is a legal delay of 90 days to on board or as soon as requested. How many employees missed the registration to the pension?

I wanted to bring this up formally but I was never able to, a few hours of lawyer is more expensive than the pension. At this stage, Hudson River Trading had made it pretty clear that they refuse to pay anything or refund legal fees they incurred on me.

It’s a good illustration of the catastrophic problem when dealing with Hudson River Trading. Every single thing done by the company is questionable when not outright unlawful. It’s reached a ridiculous point where it’s impossible to seek remediation because there’s too much to cover. Any employee who finds themselves in troubles will end up financially bankrupt in an endless list of claims, assuming one could get some lawyer(s) in the first place. It’s difficult to find legal representation, lawyers are specialized in a narrow field of law and the company is touching ten different things in a very complex case.

Final Settlement

Didn’t get anywhere reasonable. The company refused to pay outstanding holidays, refused to compensate for the non-compete, refused to refund legal fees they incurred upon me; the company plainly ignored the GDPR request.

The company simply considered that they’ve done nothing wrong; the company is in total denial about the existence of the law or the existence of contractual obligations or the existence of regulations.

That was two months in. There was a legal delay of 3 months to sue the company in employment court. Don’t know whether Hudson River Trading was playing the watch willingly or accidentally but that’s where things were. There is no money to cover going to courts and the company has made it abundantly clear that they will sabotage the process. I need a reference letter and waiver of non-compete NOW because it’s being an enormous problem to interview.

I was presented with a settlement agreement that gave me nothing. It was pages over pages of putting extreme liabilities over me in order to get a reference letter and a formal waiver of non-compete. I don’t think I’ve ever seen a contract in my life that was so one-sided. I can’t believe how they are shifting all the liabilities upon me, to cover their wrongdoings.

I cried for days.

I’ve tried to get interviews with some companies. I’ve had interview processes starting with a formal half hour phone call with HR to talk about the role and very specifically inquire about any non-compete obligations I may have. I want to cry. I will not be able to get a new job, not without the reference and waiver of non-compete. Background checks in finance go back 10 years; I will have to go back to Hudson River Trading to beg for a reference over the next 10 years, or risk watching my career burst in flame and go starving. The situation is catastrophic.

Sign or die.

Note: I consider that this contract was signed under duress and unenforceable. It was specifically meant to cover up wrongdoings from specific individuals, who are actively threatening me and using this contract as a mean to silence me.

See the settlement agreement.

Settlement

Recognize the extreme waivers, followed by extremely strict NDA followed by extreme retaliation clauses all backed with extreme warranties and retaliation clauses. If I break any of these conditions, I will have to pay the company £35,000 immediately upon request, at any point in my life, or other worse punishment. Of course the conditions are impossible to satisfy.

See clauses 6.1 waiver that specifically named Roberta Yuan and Prashant Lal as off limits. Somehow the individuals involved got themselves off limits, they cannot be pursued by any means, if I intend any actions of any nature against them, including going to court or regulators, and they will personally pursue me and request me to pay damages. It’s waiving right to make a GDPR request too. I do not agree with any of that at all. I do not believe that this is lawful either.

See clause 8 about confidentiality obligations. It’s void and only intended to scare me (and it really does because the company has no regard for the law). Under British law, all confidentiality clauses are waived when wrongdoing is involved.

See clause 7 about warranties. An enormous list of warranties that cannot possibly be provided but if I don’t bend over and give willingly consent to be raped, the company will destroy my career and my livelihood.

It’s inhuman. I disagree with all these pages. I was so afraid when I received the documents from Hudson River Trading. I was asking them to offer some compensation and provide reference letter and waiver of non-compete, without which I cannot work again. The document they provided turned out to be pages over pages of legalese to kill me and my family whenever they wish to in the future. No human being should ever have to sign anything like this.

FCA violations

For reference, there were a few back and forth with the company. The corrections I managed to get from Hudson River Trading was to get a 10 year time limit on the non-disclosure agreement with an exclusion for “immediate family” (which doesn’t matter because confidentiality obligations are void) and remove some warranties clauses, screenshot below (which doesn’t matter because it’s void too but I was not aware of that).

Below, please find the original warranty section that was sent to me and I negotiated to have removed. I was scared to death because it’s impossible to satisfy. (Remember for example that the company does not honor their obligations toward pensions which I am unable to inform them because they refuse to cover legal fees required to inform them). I had no idea it was null and in violation of financial regulations.

See FCA handbook section 18.5.3 about making settlement agreements. “Firms must not request that workers enter into warranties which require them to disclose to the firm that: (a) they have made a protected disclosure; or (b) they know of no information which could form the basis of a protected disclosure.” https://www.handbook.fca.org.uk/handbook/SYSC/18/5.html

Post Settlement

There was one final email from Hudson River Trading on or about the time to conclude the settlement. They wanted to confirm that I have formally handed over and destroyed any [incriminating] documents I may have.

That was plainly asking for destruction of evidence which is required by courts and regulators. It was unlawful and I could not reasonably reply or confirm that request. It was in breach of FCA handbook for preventing employees from making a protected disclosure. It was potentially a federal crime to ask for deletion of evidence if the evidence covered a federal crime (didn’t know at the time but embezzlement and fraud are very much on the table).

I should have kept the email to be able to publish it, but I couldn’t. I cannot look at it. I and my partner just cried. Couldn’t sleep. Had to delete it. It’s inhuman was the company is doing.

Hudson River is simultaneously ordering me to destroy critical evidence and building a paper trail for (not) doing it to pursue me later. I am trapped either way. The company asked me to be consent-in-writing to be raped otherwise the settlement is off the table and they destroy my career and take away my roof (no reference letter and no waiver of non-compete); either way they reserve the right to pursue me and take away anything I have at any future moment of their choosing. 😦

The email went hand in hand with Clause 9.

Prashant Lal

The settlement agreement is signed by Prashant Lal, one of the partners. See page below.

Did Prashant actually read and sign this document for real? (The digital signature from DocuSign confirms yes)

If yes, was Prashant explained the nature of this document? Was he explained that by signing this unlawful document he is personally liable and exposed to actions before courts and regulators?

The legal communications (letters from my lawyers and GDPR requests) were exclusively sent to Roberta Yuan (or to the law firm) who terminated me and self-elevated to the only point of contact for the company. No idea if anybody else has a copy of any documents.

(I note that I willingly refrained from trying to contact employees because the company is retaliating against anybody who gets involved and I do not want to endanger other employees, it is not safe!).

There was supposed to be a financial regulator in the company representing the FCA and SEC, I think a lady from northern Europe, was she informed and provided with a copy of the documents above? Forgot the name and did not have any contact information.

I’ve many times wanted to contact Prashant to talk to him personally, trying to deescalate the situation, maybe try to reduce legal fees and get a more positive outcome for both sides. Maybe confirm and warn him about some stuff, there is a real possibility the signatures were forged or that he was unwittingly talked into signing these documents. Does he comprehend the liability this personally exposes him to? Would a director sign any document like that for no benefit to them? Would a director willingly sign a document that exposes the company to enormous fines and counter action from regulators?

Maybe the ultimately irony is that this agreement formally prohibits me to contact Prashant Lal or anyone. The only recourse left open is to make a public disclosure to the general public.

The company was very successful in isolated me and preventing any contact with employees. The sudden destruction of the work laptop and the sudden termination while pretending to investigate the matter were terribly effective in isolating me and destroying evidences.

Fear and Wonder

It’s really puzzling the length the company is willing to, just to avoid giving a few holidays that employees are entitled to. It was not an accident. The company is fundamentally opposed to granting holidays. And it is committed and willing to destroy life over it. What happened to me will happen to the next person.

What will happen next when Hudson River Trading will extend to other locations in Singapore, Tokyo, continental Europe… Hudson River Trading will fire and threaten all employees there for raising any questions about local laws or customs?

It’s absurd.

Part IV: Revelations

There was a news article published in Bloomberg some time later, that highlighted critical new information that I wasn’t aware of. https://www.bloomberg.com/news/articles/2021-06-24/prop-trader-hudson-river-reaps-1-billion-in-frenzied-quarter

For reference, the article is right and the company is making over a billion dollar per quarter with about 500 employees. That should give you an idea of budget available to cover up and retaliation (it’s a shame the company forgot to put few pounds of budget aside to cover notice period and non-compete!).

Current and future employees of Hudson River should be afraid.

That should also give an idea how much money is on the table, personally for partners and high level employees on the other side, or employees who are committing embezzlement. A 7 or low 8 figures sum is peanuts for the company. People do get killed daily for much less than that.

Ownership

The first new information that came up: Jason Caroll is the owner and founder of the company. I did not know that!

There are three partners in Hudson River Trading: Prashant Lal, Jason Caroll, and Oaz Nir.

- Oaz Nir stopped actively running the company years ago, as far as I am aware. Never saw him.

- Prashant came much later; he’s giving quarterly presentations and signed all the unlawful documents to retaliate against me.

- No idea what was the involvement of Jason. Never saw him.

When I escalated the issue to the partners and UK employees, there was a global reply from a Jason but with a different surname. He said that he would personally look into that. No idea who that guy was until now, Jason is the owner and founder of the company. Obviously there was zero follow up on his part, employees got fired and threatened, and the holiday issue was never addressed.

I’ve many times wanted to talk to “whoever” runs Hudson River Trading. It’s ridiculous how every single thing the company has done is blatantly wrong and illegal. Surely the owner would not approve of that if he was aware.

@Jason: Your employees are stealing from you to threaten other employees and cover up their wrongdoings.

Embezzlement

In Hudson River Trading, there were only two Human Resource employees L. and R. These are the only two employees to raise employment questions or concerns. (Note there are recruiters who do outreach and go to university job fairs, these are not Human Resources per se). The first part of the disclosure is mostly with L., the second part is mostly with R.

This is formally explained in a wiki page. If there is any kind soul left at Hudson River Trading, please find and take a full screenshot of the wiki page to raise HR question (whole screen including URL bar) and send to the FCA whistle (at) fca.org.uk with subject “#53063 Hudson River Trading”.

Just found out after the Bloomberg article. R is not just Human Resources. She is a qualified lawyer in the New York bar. She is running both Human Resources AND is the lawyer of the company AND is the person executing all contracts on behalf of the company. That’s a lot of responsibility for a single individual, all with zero oversight. She never stated she was a lawyer and was representing the company. I always thought she was the manager/lead for Human Resources.

I believe this is an extreme form of conflict of interest. Everything is run by the same person. What would regulators think about that?

If employees read and follow the procedure to contact Human Resources, they may be contacting the lawyer instead, who may be preparing their termination. Is it normal for the lawyer of the company to be the contact for Human Resources?

Since R is not Human Resources, that means there is ONE SINGLE Human Resource employee, L, who is all alone. Is it normal for a company of 500+ employees to have only a single Human Resource person?

Is it reasonable for a regulated financial company with $4B in cash and a two digits percent of all equities trades in the US market, to have a human resource department consisting of a single new graduate? What would regulators think about that?

The entire time I thought I just needed to reach anybody who can order a contract review to correct holidays. There is nobody in the company who can process any of this. That makes sense now. There’s one single person controlling everything to cover her wrongdoing. She had me terminated and had my laptop erased the second I raised a concern she couldn’t bury. She personally threatened me for legal action if I were to disclose anything about what happened because that would highlight her wrongdoing and put her job in jeopardy.

See the elephant in the room?

This is a perfect setup to cover wrongdoing and embezzlement.

I am formally reporting Roberta Yuan, representing Human Resources and Legal of the company, to be formally investigated for embezzlement.

She’s terminated me and she personally threatened me to stay silent, or otherwise she would pursue me on behalf of the company. She personally contracted lawyers and executed contractual agreements on behalf of the company to retaliate and silence employees, her actions could not have been done on behalf of the company because it is illegal and exposes the company to enormous fines and regulatory actions, meaning it was done on her own initiative but using Hudson River Trading for the express purpose of covering her own wrongdoings. That ought to fall under the definition of embezzlement. https://en.wikipedia.org/wiki/Embezzlement

I sincerely believe that both Human Resources and Legal functions in Hudson River Trading are compromised.

It’s catastrophic. Only god knows what else may be covered up. Every contract from the company may have had similar treatment as those in this article. Any employee who report any concerns will be terminated and threatened the same way I was. Any next employee who joins Human Resources or legal will be faced with a walls of problems and risk their careers. For reference, there was a recruitment person who was fired suddenly in great fanfare earlier this year, I think she’d have to work with HR and legal closely as part of her work, what did she come across that pushed the company to instant fire?

The involvement of Prashant Lal remains unclear, except for signing all the documents and being responsible for everything. I never talked to him. (It’s extremely fishy. Why would anybody do that? There must be critical information that’s missing?).

This article is a protected disclosure for exceptional wrongdoing https://www.handbook.fca.org.uk/handbook/glossary/G3485p.html

Reporting Concerns to the FCA

I encourage all employees, both current and previous employees, to report concerns and any wrongdoings to the FCA. You can provide tips, conversations and documents to the FCA directly to whistle (at) fca.org.uk subject #53063. There is no not need to be in the UK to assist the FCA. Employees from all over the world can contribute and make the world a safer place.

No human being should be treated and threatened like Hudson River Trading has done. It’s wrong. It has gone way too far.

I tried to but I can’t live with the constant fear over my head that the company will turn around any day and try to take anything I have. I can’t live knowing that they will do the exact same procedure to me to the next person. It’s not human.

Final Disclosure

Now is December 2021, the next bank Holidays will be soon on 27th December 2021, known as Boxing Day.

Boxing Day was a closed day, employees were not expected to work on that day and did not need to deduct one day from their allowance, it was formally written in the company policy, until the wiki was unilaterally edited to remove that day, an edit which was unlawful.

What’s the result? Are employees supposed to work or not? Who knows?

Please clarify holidays.

Please stop firing people for being off on bank holidays when no employee can comprehend what days they are required to work or not.